Performance optimization for High Performance Computing (HPC) and Artificial Intelligence/Deep Learning (AI/DL) applications is a critical research area aimed at improving the efficiency and effectiveness of computational workflows. Researchers in this field develop novel algorithms, tools, and methodologies that maximize the utilization of computing resources while minimizing execution time and energy consumption.

This work involves tuning software implementations, exploiting parallel computing architectures and hardware accelerators such as GPUs and TPUs, and applying optimization techniques such as algorithmic restructuring and data prefetching. By optimizing performance in HPC and AI/DL applications, researchers aim to enable faster simulations, more accurate predictions, and, ultimately, groundbreaking discoveries in science, engineering, and technology.

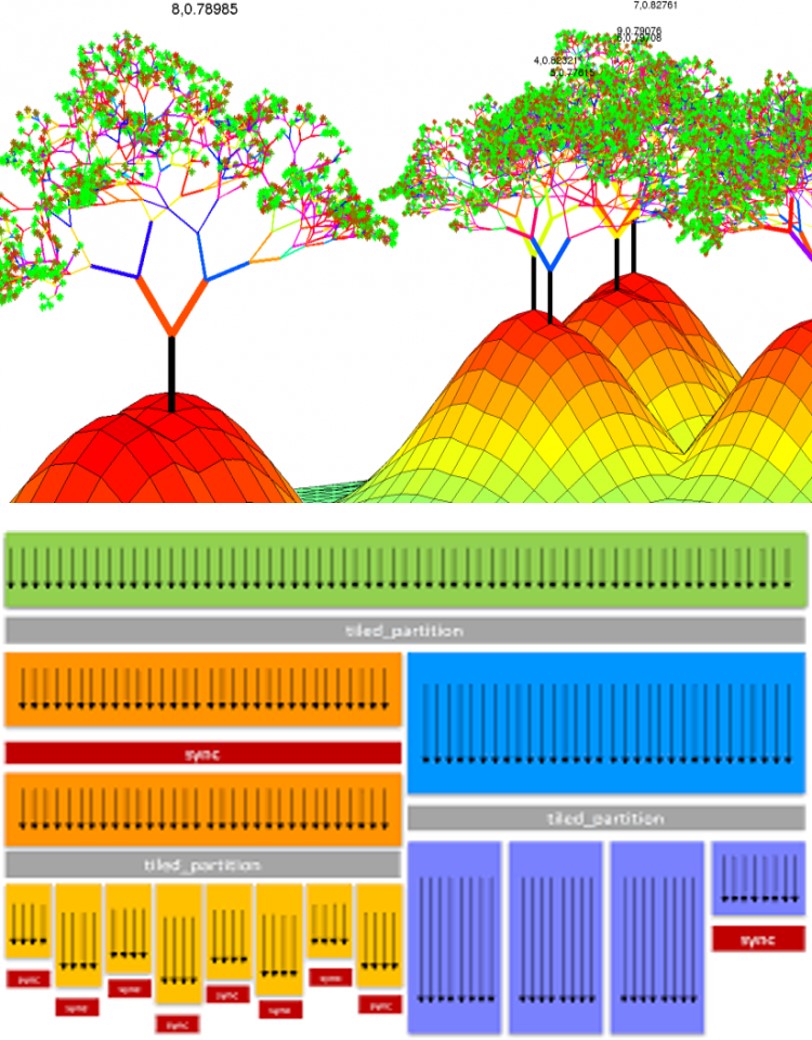

Parallel computing on various architectures is a broad research domain dedicated to harnessing the computational power of diverse hardware platforms to solve complex problems efficiently. Researchers in this field design and optimize parallel algorithms that exploit the distinctive features and capabilities of each architecture, including multi-core CPUs, GPUs, FPGAs, and specialized accelerators. They also investigate techniques for load balancing, task scheduling, and communication optimization to maximize parallel efficiency and scalability across different computing environments.

Researchers also explore programming models and languages that abstract away the complexities of parallel programming, allowing developers to exploit parallelism without extensive hardware expertise. Through these advances, researchers aim to unlock substantial performance gains, enabling the rapid execution of large-scale simulations, data analytics, and scientific computations across a wide range of application domains.

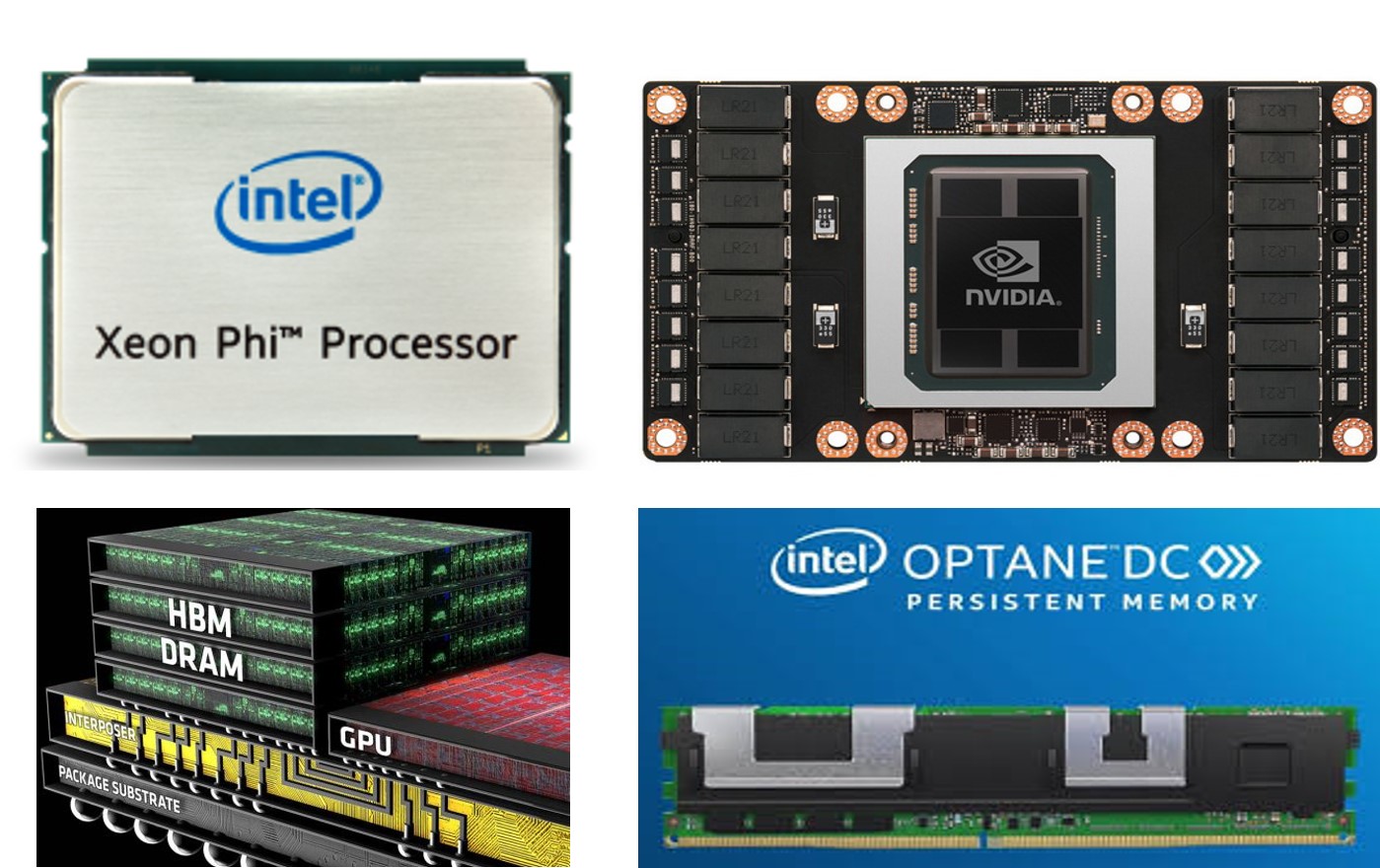

Heterogeneous computing and memory systems form a dynamic research area at the intersection of hardware architecture, system design, and software optimization. Researchers in this field study how to integrate diverse processing elements, such as CPUs, GPUs, FPGAs, and other accelerators, into a cohesive computing system. They investigate techniques for orchestrating workload distribution and task scheduling across heterogeneous components so as to exploit their complementary strengths and maximize overall system performance. They also design memory subsystems tailored to the differing requirements of each processing unit: fast access for compute-intensive tasks, large capacity for data storage and retrieval, and low-latency communication between components.

Research in heterogeneous computing and memory systems also explores novel memory technologies and hierarchies to meet the growing demands of data-intensive applications. This includes investigating emerging technologies such as non-volatile memory (NVM), High Bandwidth Memory (HBM), and the Hybrid Memory Cube (HMC), and integrating them into heterogeneous platforms to optimize memory access patterns and reduce data movement overhead. Researchers also study memory management mechanisms, such as cache coherence protocols and memory tiering, to use the available memory resources efficiently and minimize the latency of data access across heterogeneous components. By advancing heterogeneous computing and memory systems, researchers aim to enable scalable, energy-efficient solutions for a wide range of applications, including machine learning, scientific simulations, and big data analytics.

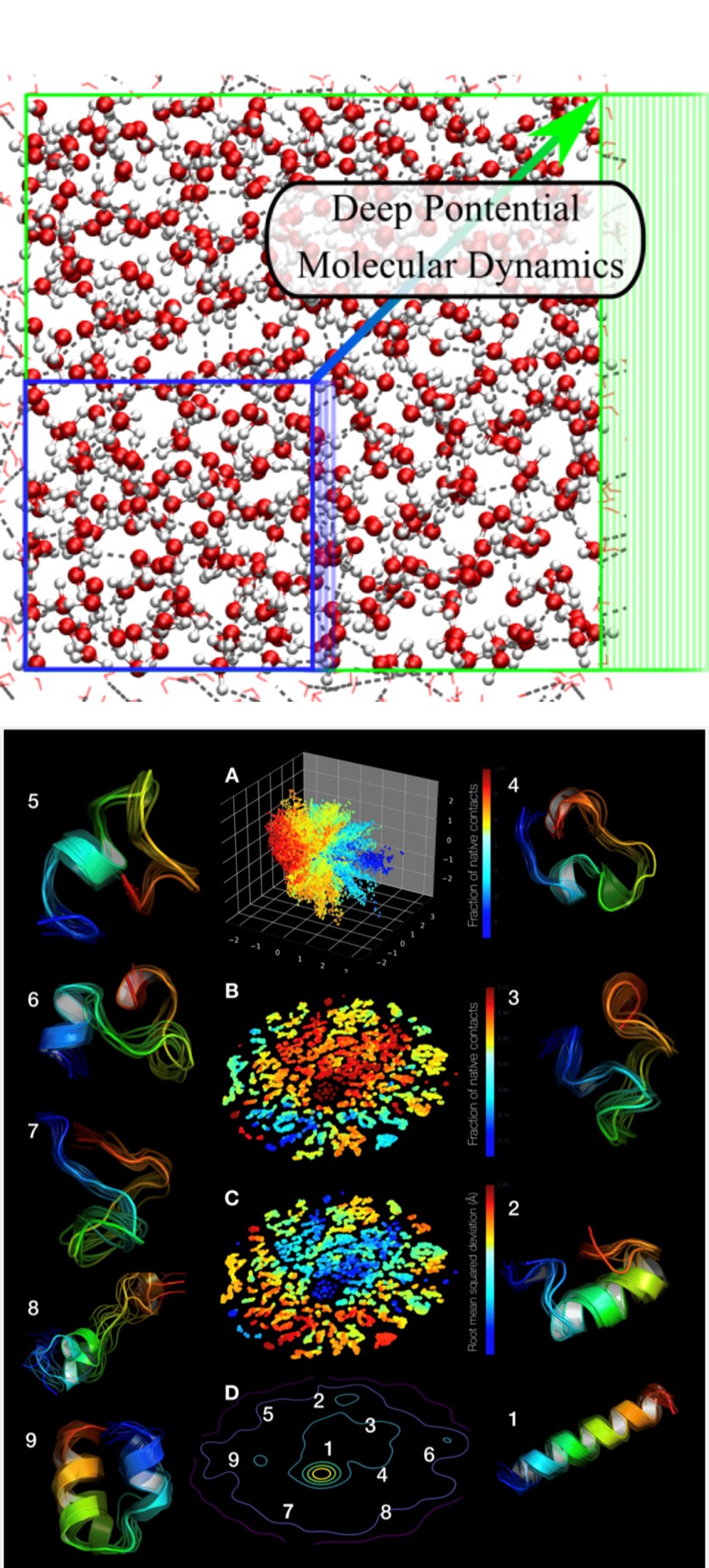

Scientific machine learning (SciML) is an interdisciplinary research field that combines principles from machine learning, computational science, and domain-specific knowledge to address complex scientific challenges. At its core, SciML develops machine learning models and techniques tailored to scientific applications, enabling researchers to extract actionable insights from large-scale experimental and observational data. Compared with conventional machine learning, SciML places greater emphasis on interpretability, uncertainty quantification, and domain-specific constraints, which makes it well suited to tasks such as modeling physical systems, predicting complex phenomena, and optimizing experimental design.

SciML researchers employ a variety of machine learning approaches, including deep learning, Bayesian inference, symbolic regression, and reinforcement learning, to analyze and interpret scientific data. These techniques are often customized and augmented with domain knowledge to capture the underlying physics, chemistry, or biology of the systems under study. SciML also emphasizes uncertainty quantification, which lets scientists assess the reliability and robustness of model predictions and make informed decisions in the face of uncertainty. By combining machine learning with scientific principles, SciML facilitates the discovery of new scientific insights, accelerates the development of predictive models, and deepens our understanding of complex natural phenomena.

SciML plays a crucial role in advancing scientific discovery and innovation across domains such as physics, chemistry, biology, climate science, and materials science. With machine learning techniques, scientists can efficiently analyze vast amounts of experimental and simulation data, identify hidden patterns, and formulate hypotheses for further investigation. SciML also enables hybrid models that couple data-driven components with domain-specific knowledge, combining the strengths of both approaches. Ultimately, by bridging the gap between machine learning and scientific research, SciML helps scientists tackle grand challenges, accelerate the pace of discovery, and pave the way for transformative breakthroughs in science and engineering.